When different systems, applications or organisations need to communicate with each other and actually understand what is being said, interoperability is key. It enables a hospital’s software to communicate with an insurance company, for example, or one vendor’s inventory system to synchronise with another’s logistics platform.

At the heart of many of these data exchanges is XML.

XML (Extensible Markup Language) may not be new or flashy, but it remains one of the most powerful tools for achieving reliable, structured interoperability across diverse platforms.

Why is interoperability so hard?

Systems are built using different programming languages, data models and communication protocols. Without a shared format or structure, exchanging data can result in a complex web of custom APIs, ad hoc conversions, and manual adjustments.

To get systems working together seamlessly, you need:

- A standardised structure for data.

- A way to validate that structure.

- A format that is language-agnostic and platform-neutral.

XML ticks all these boxes.

How XML enables interoperability

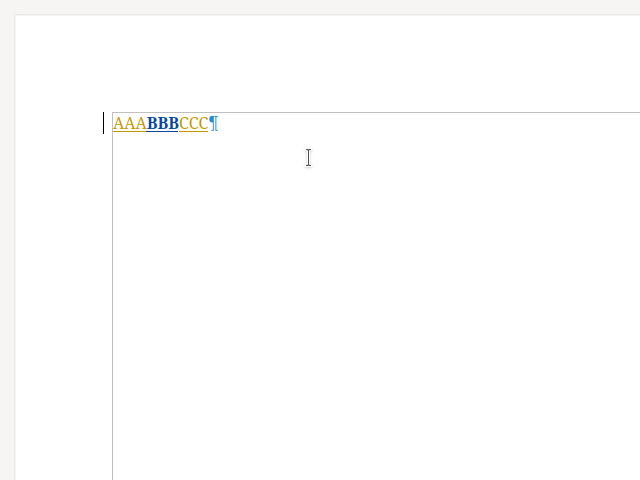

1. Self-describing structure

XML uses tags to clearly label data:

    <customer>
      <name>Maria Ortega</name>
      <id>87234</id>
    </customer>

This means that a receiving system doesn’t have to guess what each field is, because every value carries an explicit label. This reduces the risk of misinterpretation and supports automated parsing.
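As a rough illustration of what automated parsing of labelled fields can look like, the sketch below reads the customer record with Python's standard `xml.etree.ElementTree` module. The function name `read_customer` and the choice to convert the ID to an integer are assumptions made for this example, not part of any standard.

```python
import xml.etree.ElementTree as ET

# The customer record from the example above, held as a string.
CUSTOMER_XML = """<customer>
  <name>Maria Ortega</name>
  <id>87234</id>
</customer>"""


def read_customer(xml_text: str) -> dict:
    """Parse a <customer> document and return its labelled fields."""
    root = ET.fromstring(xml_text)
    # Fields are looked up by tag name, not by position in the document.
    return {
        "name": root.findtext("name"),
        "id": int(root.findtext("id")),  # assumption: IDs are whole numbers
    }


customer = read_customer(CUSTOMER_XML)
print(customer)
```

Because the lookup is by tag name, the receiver would still find both fields if the sender swapped their order.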

2. Schema validation

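Using XSD (XML Schema Definition) or DTD (Document Type Definition), you can define the rules that an XML document must adhere to, such as which elements are required, in what order they appear and how they nest. XSD goes further than DTD by also constraining data types, for example requiring that a field holds an integer or a valid date.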

This is critical for:

- verifying incoming data

- preventing malformed or incomplete exchanges

- ensuring consistency across multiple systems
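To make the idea of verifying incoming data concrete, here is a minimal hand-written check in Python. It is only a stand-in for real schema validation: Python's standard library does not validate against XSD or DTD, so in practice a validating parser would do this work (the third-party `lxml` library, for instance, provides XSD validation). The rule set `CUSTOMER_RULES` and the function `validate_customer` are hypothetical names invented for this sketch.

```python
import xml.etree.ElementTree as ET

# Hypothetical rules for a <customer> record: each required child
# element is mapped to a function that checks its text content.
CUSTOMER_RULES = {
    "name": lambda text: bool(text.strip()),     # must be non-empty
    "id": lambda text: text.strip().isdigit(),   # must be a whole number
}


def validate_customer(xml_text: str) -> list:
    """Return a list of problems; an empty list means the record passed."""
    try:
        root = ET.fromstring(xml_text)
    except ET.ParseError as exc:
        # Malformed documents are rejected before any rule is applied.
        return [f"not well-formed: {exc}"]

    problems = []
    if root.tag != "customer":
        problems.append(f"unexpected root element <{root.tag}>")
    for tag, is_valid in CUSTOMER_RULES.items():
        child = root.find(tag)
        if child is None:
            problems.append(f"missing required element <{tag}>")
        elif not is_valid(child.text or ""):
            problems.append(f"invalid value in <{tag}>")
    return problems


complete = "<customer><name>Maria Ortega</name><id>87234</id></customer>"
incomplete = "<customer><name>Maria Ortega</name></customer>"
print(validate_customer(complete))
print(validate_customer(incomplete))
```

A schema expresses the same kind of rules declaratively, so every system in the exchange can apply them identically without sharing code.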

3. Namespaces for avoiding collisions

XML namespaces prevent tag name conflicts when data from different sources is combined.

    <doc xmlns:h="http://www.w3.org/TR/html4/" xmlns:f="http://www.w3schools.com/furniture">
      <h:table>…</h:table>
      <f:table>…</f:table>
    </doc>

Without namespaces, systems could misinterpret elements with the same name but different meanings.
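The following sketch shows how a consumer might tell the two `table` elements apart using Python's standard `xml.etree.ElementTree` module. The element contents ("HTML table", "Coffee table") are placeholder text added for illustration, and the prefixes `html` and `furn` in the lookup map are arbitrary local choices; only the namespace URIs have to match the document.

```python
import xml.etree.ElementTree as ET

# Same structure as the example above, with placeholder content.
DOC = """<doc xmlns:h="http://www.w3.org/TR/html4/"
     xmlns:f="http://www.w3schools.com/furniture">
  <h:table>HTML table</h:table>
  <f:table>Coffee table</f:table>
</doc>"""

# Map local prefixes to namespace URIs. The prefixes need not match
# the ones used inside the document; the URIs do.
NS = {
    "html": "http://www.w3.org/TR/html4/",
    "furn": "http://www.w3schools.com/furniture",
}

root = ET.fromstring(DOC)
html_table = root.find("html:table", NS)
furniture_table = root.find("furn:table", NS)

# ElementTree stores the full name as "{namespace-uri}local-name".
print(html_table.tag)
print(furniture_table.text)
```

Internally the parser expands each prefixed name to the namespace URI plus the local name, which is why two elements that both look like `table` never collide.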

4. Cross-platform compatibility

XML is plain text, and mature parsers exist for virtually every language and platform, whether the system is written in Java, .NET, Python or COBOL. This makes it well suited to long-term data exchange and to integration between legacy and modern systems.
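One way to picture this is a round trip through raw bytes, which is all that two systems ever really share. The sketch below serialises a customer record to UTF-8 with an XML declaration and then parses it back, as a receiver on another platform might. The helper names `to_bytes` and `from_bytes` are hypothetical, and the accented name is a variation on the earlier example chosen to show that the declared encoding carries non-ASCII text safely.

```python
import xml.etree.ElementTree as ET


def to_bytes(name: str, customer_id: int) -> bytes:
    """Serialise a customer record to UTF-8 bytes with an XML declaration."""
    customer = ET.Element("customer")
    ET.SubElement(customer, "name").text = name
    ET.SubElement(customer, "id").text = str(customer_id)
    # The declaration states the encoding, so the receiver need not guess.
    return ET.tostring(customer, encoding="utf-8", xml_declaration=True)


def from_bytes(payload: bytes) -> tuple:
    """Parse the bytes back into a (name, id) pair."""
    root = ET.fromstring(payload)
    return root.findtext("name"), int(root.findtext("id"))


payload = to_bytes("María Ortega", 87234)
print(payload.decode("utf-8"))
print(from_bytes(payload))
```

Nothing in the payload depends on the language that produced it, so the receiving side could equally be a Java or .NET parser.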

XML in real-world interoperability

Healthcare: HL7 CDA/FHIR

Hospitals, clinics, insurance providers and pharmacies rely on XML-based formats to exchange clinical records, billing data and prescriptions. HL7’s CDA (Clinical Document Architecture) is an XML-based standard for clinical documents that is used worldwide, and the newer FHIR standard supports XML as one of its exchange formats.

Government: e-government forms and tax data

Tax filings, business registrations and compliance documents are often submitted in XML format. This ensures consistent structure across various jurisdictions and software vendors.

Publishing: DITA and JATS

DITA is used for modular content creation and JATS for journal publishing. Both are XML standards that allow interoperability between authors, editors, publishers and archive systems, even when they use different tools.

Finance: XBRL

XBRL (eXtensible Business Reporting Language) uses XML to standardise financial reports, enabling regulators, investors and analysts to automatically process and compare data from thousands of companies.

Summary

Interoperability isn’t just about convenience. It’s about accuracy, consistency and trust. XML’s rigidity helps to enforce that trust.

XML may not be trendy, but it remains the backbone of system-to-system interoperability. Its structured format, validation tools and long track record make it essential wherever precision and compatibility are non-negotiable.

If your systems need to communicate reliably and seamlessly across platforms, XML is one of the most dependable formats they can use.

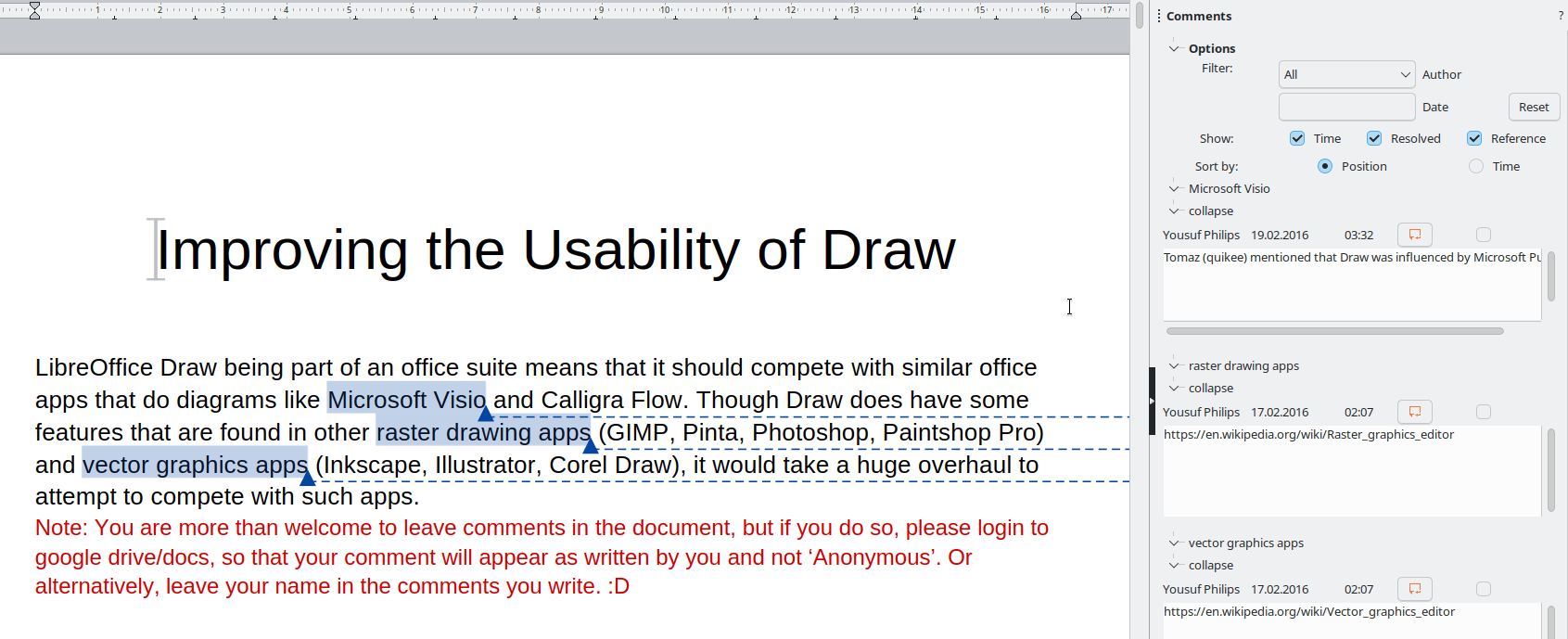

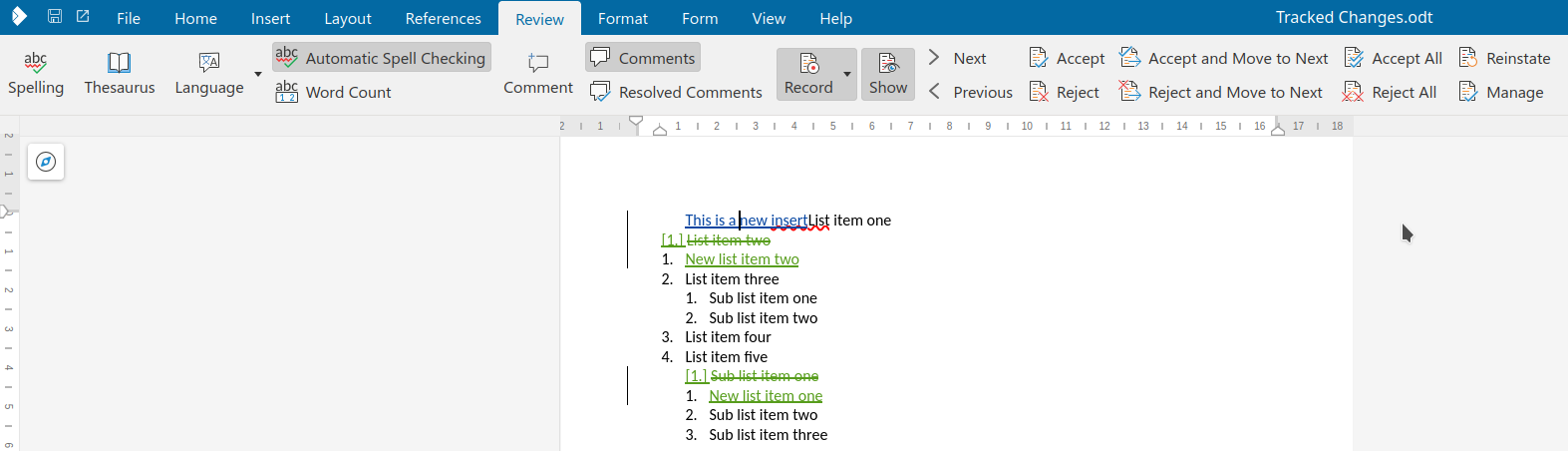

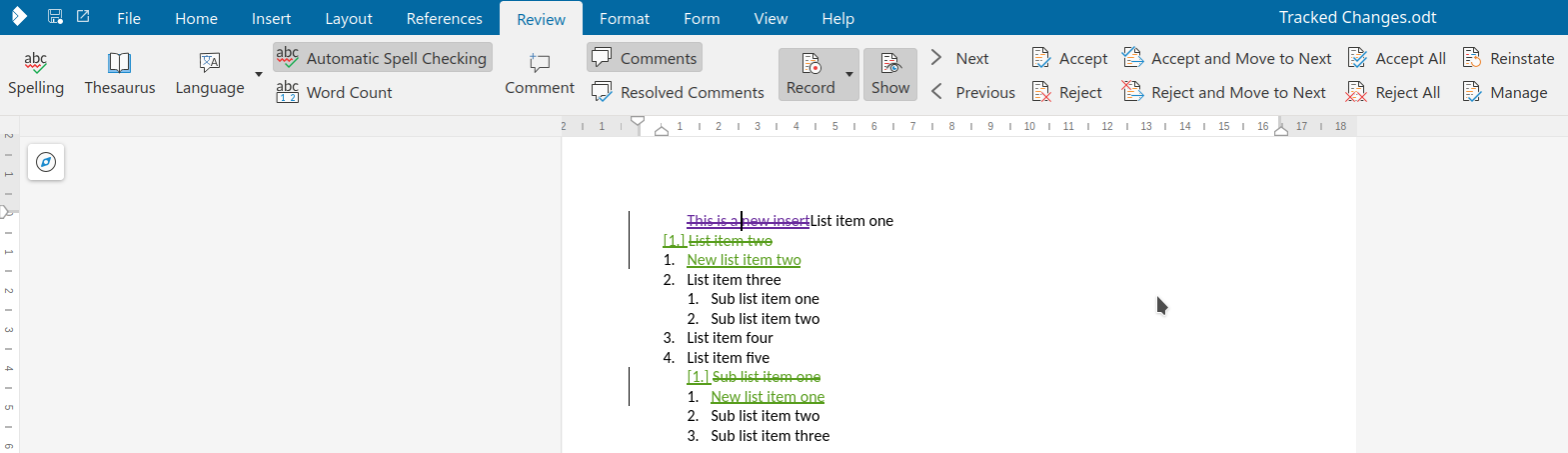

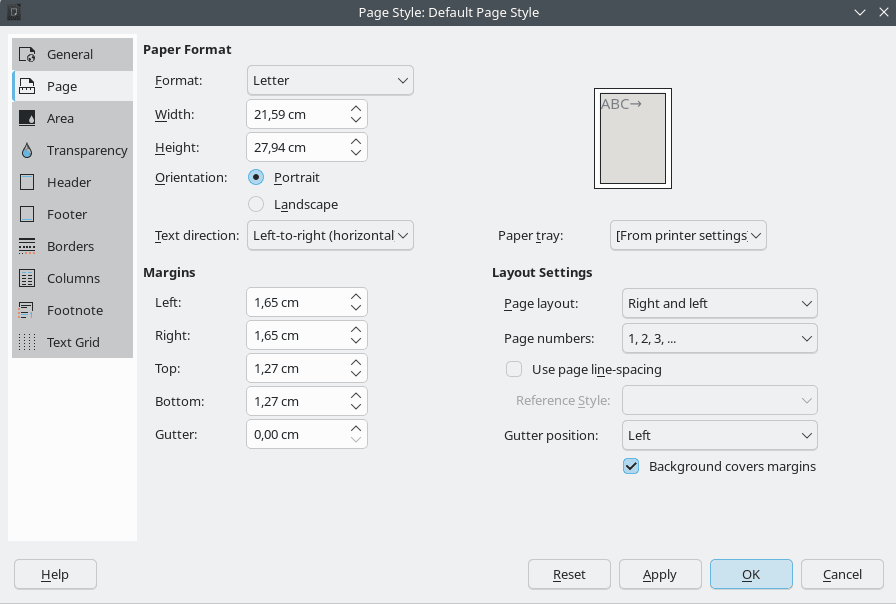

These user guides are the ultimate reference for anyone using LibreOffice — whether at home, at work, or at school. From spreadsheets to presentations, from text documents to complex equations: it’s all covered, clearly and accessibly.

The work is 100% community-driven! Jean Weber led the Writer guide, Peter Schofield coordinated the Impress, Draw, and Math guides, and Olivier Hallot headed the Calc guide.

Each new edition is more than just an update — it’s a chance to improve clarity, add the latest features, and deliver the best experience possible for end users. These guides complement the built-in LibreOffice Help and are perfect for deepening your knowledge.

The guides are available now for free download in PDF, ODT (OpenDocument format), and HTML (for online reading). And soon, you’ll be able to order printed copies via LuLu Inc.

Get your guides now:
